How to Train AI With Use Cases?

AI (Artificial Intelligence) is now used in many things we see and use every day, like chatbots, smart apps, and online shopping. But for AI to work well, it needs to learn from the right data. The best place to get this data is from your own database. This data can include customer details, product info, orders, messages, and more. When you train AI using your own data, it learns to understand your business better and gives more helpful results.

In this blog, we will explain how to train AI with use cases using data from your database. You will learn how to prepare your data, pick the right AI tools, and teach the AI step by step.

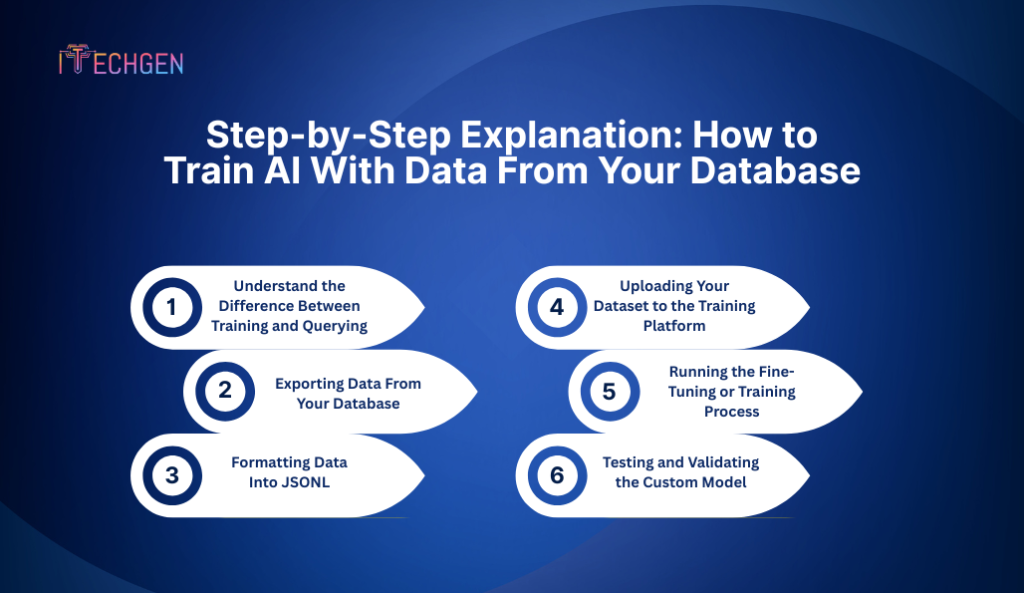

Step-by-Step Explanation: How to Train AI With Data From Your Database

1. Understand the Difference Between Training and Querying

Before diving into development, it’s important to understand the difference between training an AI model and querying your database. Many people assume that AI must be trained on every piece of information it uses. This is not always true. If your data is constantly changing or stored in structured tables (like SQL), you often don’t need to train the AI at all. Instead, the better approach is to keep the AI lightweight and make it fetch data from your system in real time when a user asks something.

Training, on the other hand, is required when you want the model to learn patterns or answer based on static, repeated, or complex examples. For example, customer support conversations, product manuals, or business processes can be used to train an AI to respond more effectively. If your use case requires the AI to speak in your company’s voice, understand your workflows, or generate responses without real-time lookups, then training makes sense. Otherwise, querying your data dynamically at request time is faster and more cost-efficient.

2. Exporting Data From Your Database

Before working with your own internal data, the first step is to export it from the database where it is stored. This may be a relational database such as PostgreSQL, MySQL, or SQL Server. Depending on your use case, you may want to export whole tables, filtered records, or joined records that contain only the information you want the AI to learn. For example, if your business is focused on online orders, you may want to export customer, order, and product data.

Data can be exported using simple SQL queries. You can write a SELECT statement to fetch relevant fields, apply WHERE clauses to filter rows, and save the results in a .CSV, .JSON, or .XLSX format. Exported data should be cleaned before further processing — remove duplicates, unnecessary fields, and sensitive information. This step ensures you’re working only with relevant and clean datasets. Most programming languages (e.g., Python, Node.js) also allow you to write scripts to automate this data export process on a schedule.
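As a minimal sketch of the export step, the following Python script joins three tables, filters rows with a WHERE clause, removes duplicates with DISTINCT, and leaves out a sensitive column (email). The schema, table names, and function names are hypothetical, and an in-memory SQLite database stands in for your real PostgreSQL or MySQL connection.

```python
import csv
import io
import sqlite3


def build_demo_db():
    """Create a tiny in-memory database (hypothetical schema, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT);
        CREATE TABLE products (id INTEGER PRIMARY KEY, title TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY, customer_id INTEGER, product_id INTEGER,
            quantity INTEGER, ordered_at TEXT
        );
        INSERT INTO customers VALUES (1, 'Ana', 'ana@example.com'), (2, 'Ben', 'ben@example.com');
        INSERT INTO products VALUES (1, 'Smart Fitness Watch'), (2, 'Wireless Earbuds');
        INSERT INTO orders VALUES
            (1, 1, 1, 2, '2023-12-30'),
            (2, 1, 2, 1, '2024-01-05'),
            (3, 2, 1, 1, '2024-02-10');
        """
    )
    return conn


def export_orders_to_csv(conn, out_file, since):
    """Write orders placed on or after `since` to `out_file` as CSV; return the row count."""
    query = """
        SELECT DISTINCT c.id AS customer_id, p.title AS product,
               o.quantity, o.ordered_at
        FROM orders o
        JOIN customers c ON c.id = o.customer_id
        JOIN products p ON p.id = o.product_id
        WHERE o.ordered_at >= ?
        ORDER BY o.ordered_at
    """
    cursor = conn.execute(query, (since,))  # parameterized to avoid SQL injection
    headers = [column[0] for column in cursor.description]
    rows = cursor.fetchall()
    writer = csv.writer(out_file)
    writer.writerow(headers)
    writer.writerows(rows)
    return len(rows)
```

To write a real file, open it with `open("orders.csv", "w", newline="", encoding="utf-8")` and pass the file object as `out_file`. Running the same function from a scheduler is one way to automate the export.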

3. Formatting Data Into JSONL

Once the data is exported, the next step is to prepare it in a form that machine learning systems can consume. Many fine-tuning platforms accept JSONL (JSON Lines) files. JSONL is preferred for fine-tuning and for processing examples in bulk. Each line in a JSONL file is a single, self-contained JSON object, typically one input-output pair, which makes the file fast and easy to parse line by line.

For example, if you exported your customer service questions and answers/solutions from your database, each line in your JSONL would represent a single question (in the prompt field) and a single answer (in the completion field). The following is a simplified view of this:

```json
{"prompt": "How can I reset my password?", "completion": "To reset your password, go to the login page and click on 'Forgot Password'."}
```

You can write a script in Python or JavaScript to convert your .CSV or SQL output to .jsonl. Make sure the data is normalized and trimmed, with no stray special characters or formatting problems. Consistent formatting is essential so the model can learn how each prompt maps to its expected output.
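A conversion script along these lines could look like the sketch below. It reads a CSV, collapses stray whitespace, skips rows with a missing question or answer, and writes one JSON object per line. The column names `question` and `answer` are assumptions about your export, not a fixed convention.

```python
import csv
import io
import json


def normalize(text):
    """Trim the text and collapse runs of whitespace into single spaces."""
    return " ".join((text or "").split())


def csv_to_jsonl(csv_file, jsonl_file, prompt_col="question", completion_col="answer"):
    """Convert CSV rows to JSONL prompt/completion pairs; return how many were written."""
    written = 0
    for row in csv.DictReader(csv_file):
        prompt = normalize(row.get(prompt_col))
        completion = normalize(row.get(completion_col))
        if not prompt or not completion:
            continue  # skip incomplete examples instead of training on them
        record = {"prompt": prompt, "completion": completion}
        jsonl_file.write(json.dumps(record, ensure_ascii=False) + "\n")
        written += 1
    return written
```

Both arguments are file-like objects, so the same function works with files opened via `open(...)` or with in-memory buffers such as `io.StringIO` during testing.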

4. Uploading Your Dataset to the Training Platform

Once your data is formatted properly, it needs to be uploaded to the system or platform where the AI model will be trained. Most machine learning platforms accept datasets through a user interface, API endpoints, or both. Some platforms have strict formatting rules, dataset size limits, or validation requirements that must be met before training can start.

You typically begin by authenticating with the platform and using their tool or SDK to upload your .jsonl file. After uploading, the system might perform a quick validation to check for any formatting errors. If errors exist, the system will notify you so you can correct them. It’s important at this stage to double-check that all prompts and completions are properly matched and the examples accurately reflect what you want the AI to learn.
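Running a local check before uploading can catch most formatting errors early. The sketch below is not any platform's validator; it is a hypothetical helper that confirms each line is a JSON object with non-empty `prompt` and `completion` strings.

```python
import json


def validate_jsonl(lines, required=("prompt", "completion")):
    """Return a list of (line_number, problem) tuples; an empty list means no problems found."""
    problems = []
    for number, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((number, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(record, dict):
            problems.append((number, "not a JSON object"))
            continue
        for key in required:
            value = record.get(key)
            if not isinstance(value, str) or not value.strip():
                problems.append((number, f"missing or empty '{key}'"))
    return problems
```

Because an open text file iterates line by line, you can pass it straight in: `validate_jsonl(open("train.jsonl", encoding="utf-8"))`.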

Remember that once you upload data, it becomes part of the training context for the model. Avoid including sensitive or confidential information unless you are hosting and managing your model in a secure, private environment.

5. Running the Fine-Tuning or Training Process

Now that your dataset has been uploaded and validated, you can start training. You will need to select a base model (the options depend on your platform) and set the number of training steps, the learning rate, and other settings. Training can take from a few minutes to several hours, depending on the size of your dataset and the complexity of the model.

Fine-tuning works by adjusting the model’s internal weights based on the examples you provide. If the training set contains hundreds of customer service questions with their expected answers, the model learns to answer similar questions even when they are worded differently. If you train on transaction data, it can pick up on patterns in customer behavior.

You will want to have logs or dashboards to observe and track the training process. Most platforms will also provide metrics such as accuracy, loss, and validation score, so you can see whether the model is learning the way you expect. If accuracy is low or loss is high, you may need to go back and improve your dataset.
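One way to read those metrics is to compare training loss with validation loss across epochs. The sketch below is a rough heuristic, not a platform feature: the function name, the labels, and the `patience` threshold are all assumptions chosen for illustration.

```python
def diagnose_training(train_loss, val_loss, patience=2):
    """Classify a run from per-epoch loss values as 'not learning', 'overfitting', or 'healthy'."""
    if len(train_loss) != len(val_loss) or len(train_loss) < 2:
        raise ValueError("need two equal-length loss histories with at least 2 epochs")
    # Training loss that never improves suggests a data or learning-rate problem.
    if train_loss[-1] >= train_loss[0]:
        return "not learning"
    # Find the epoch with the lowest validation loss.
    best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__)
    # If validation loss has not improved for `patience` epochs, the model is
    # likely memorizing the training set rather than generalizing.
    if len(val_loss) - 1 - best_epoch >= patience:
        return "overfitting"
    return "healthy"
```

A result of "not learning" or "overfitting" is a hint to revisit the dataset or settings, as described above, rather than a definitive verdict.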

6. Testing and Validating the Custom Model

Once training is complete, you should never deploy the model into production immediately. It’s essential to test and validate it thoroughly. Begin by feeding it test prompts that are similar but not identical to your training data. Evaluate whether the responses are logical, correct, and aligned with your expectations. You should also test the model with edge cases or incomplete inputs to see how it handles them.

If the model returns incorrect or vague answers, it may mean the training data was too small, too repetitive, or lacked clarity. In such cases, go back to your training dataset, improve the examples, and retrain the model. Validation is not just about getting correct answers; it also covers tone, consistency, and how the model behaves when it is unsure.

In some cases, you may also perform A/B testing between your new model and an older version to compare performance. You can collect user feedback or log real interactions to continuously improve the model over time.
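A simple offline comparison between two model versions can be sketched as follows. The models here are plain Python callables standing in for real API calls, and the word-overlap score with a 0.6 threshold is a deliberately crude, assumed metric; real evaluations usually combine automated scoring with human review.

```python
def token_overlap(expected, actual):
    """Fraction of expected words that also appear in the actual answer (0.0 to 1.0)."""
    expected_words = set(expected.lower().split())
    actual_words = set(actual.lower().split())
    if not expected_words:
        return 0.0
    return len(expected_words & actual_words) / len(expected_words)


def evaluate(model, test_cases, threshold=0.6):
    """Return the share of (prompt, expected) pairs where the model's answer scores >= threshold."""
    passed = sum(
        1 for prompt, expected in test_cases
        if token_overlap(expected, model(prompt)) >= threshold
    )
    return passed / len(test_cases)


# Held-out prompts that are similar to, but not copied from, the training data.
TEST_CASES = [
    ("How do I reset my password?", "click forgot password on the login page"),
    ("Where is my invoice?", "open billing and download the invoice"),
]


def old_model(prompt):
    """Stand-in for a generic model that gives the same vague reply every time."""
    return "please contact support"


def new_model(prompt):
    """Stand-in for a fine-tuned model that gives company-specific answers."""
    if "password" in prompt.lower():
        return "Click Forgot Password on the login page"
    return "Open Billing and download the invoice"
```

Calling `evaluate(old_model, TEST_CASES)` and `evaluate(new_model, TEST_CASES)` gives two pass rates you can compare before deciding whether to promote the new version.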

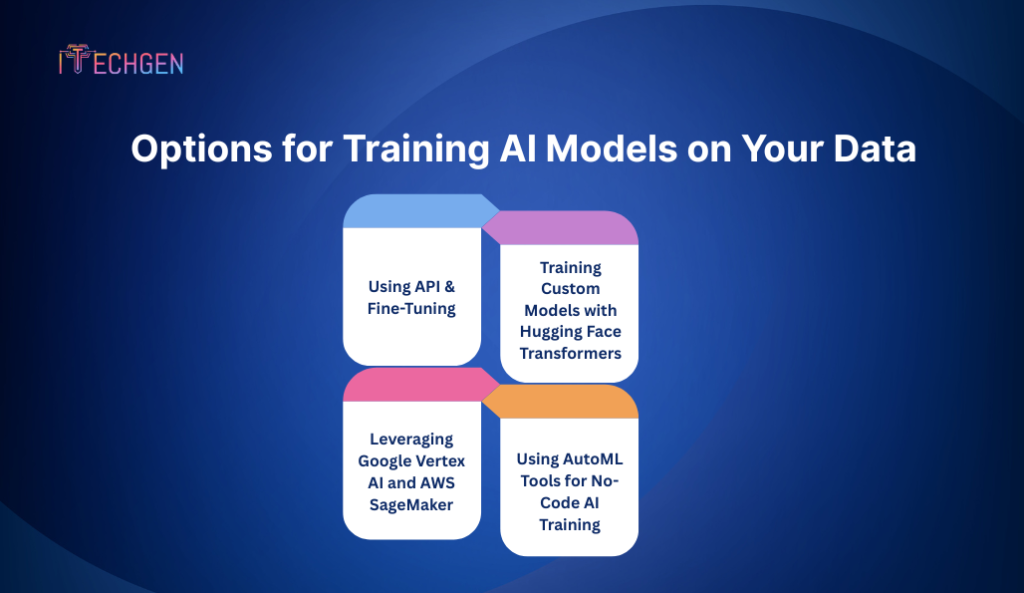

Options for Training AI Models on Your Data

1. Using API & Fine-Tuning (Chat-based Models and Text Completion Models)

One of the most accessible ways to train AI models today is through APIs offered by leading providers. These platforms allow you to fine-tune powerful language models using your own data with minimal infrastructure.

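For example, well-known models available through provider APIs include the following. Fine-tuning support varies by model and provider, so check the current documentation before committing to one:

- GPT-4 Turbo and GPT-4o (OpenAI): Offer advanced reasoning and conversational abilities.

- text-davinci-003 (OpenAI): Earlier text-completion model, now deprecated in favor of newer chat models.

- Claude 3 (Anthropic): Known for its safety and context handling.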

- Mistral models (Mixtral, Mistral 7B): Open-weight models offering efficient performance.

With APIs, you simply prepare your data in formats like JSONL, upload via CLI or web interface, and run fine-tuning jobs. These platforms typically handle infrastructure, scaling, and security. They are ideal for those who want conversational agents, customer service bots, or document-based assistants without building from scratch.

Use case examples:

- Fine-tuning a support bot with your company’s help desk tickets.

- Teaching a language model your product catalog for sales queries.
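Some chat-model fine-tuning APIs expect each training example as a list of role-tagged messages rather than a flat prompt/completion pair. The sketch below converts one format to the other. The `messages`, `role`, and `content` layout follows the convention used for OpenAI-style chat fine-tuning; the function names and system prompt are hypothetical, and you should confirm the exact schema in your provider's documentation.

```python
import json


def to_chat_example(record, system_prompt):
    """Wrap a prompt/completion pair as a system, user, and assistant message list."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": record["prompt"]},
            {"role": "assistant", "content": record["completion"]},
        ]
    }


def convert_lines(jsonl_lines, system_prompt):
    """Convert prompt/completion JSONL lines into chat-format JSONL lines, skipping blank lines."""
    return [
        json.dumps(to_chat_example(json.loads(line), system_prompt), ensure_ascii=False)
        for line in jsonl_lines
        if line.strip()
    ]
```

The system prompt is a natural place to describe your company's tone, so every training example reinforces the same voice.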

2. Training Custom Models with Hugging Face Transformers

Hugging Face is a popular open-source ecosystem for building and training custom models using the Transformers library. It supports thousands of pre-trained models that you can fine-tune or extend using your own datasets.

Latest models available for fine-tuning via Hugging Face:

- Meta’s LLaMA 3 (8B & 70B): High-performance open-weight models.

- Mistral 7B / Mixtral 8x22B: A compact dense model and a sparse mixture-of-experts model, both with competitive results.

- Falcon 180B: Suitable for large-scale reasoning tasks.

- Gemma (by Google): Lightweight, fine-tunable, and privacy-friendly.

- Phi-3 (Microsoft): Small footprint with strong performance for on-device AI.

You can train these models using Python and PyTorch or TensorFlow on cloud GPUs or your own hardware. Hugging Face also offers AutoTrain, a UI-based training tool for faster iteration.

Use case examples:

- Fine-tuning LLaMA 3 on legal documents for a legal advisor chatbot.

- Using Mixtral with a dataset of health queries to assist healthcare workers.

3. Leveraging Google Vertex AI and AWS SageMaker

For enterprises and developers needing full control, cloud ML platforms like Google Vertex AI and Amazon SageMaker offer scalable model training and deployment pipelines. These platforms support both built-in models and the ability to bring your own.

Google Vertex AI now supports:

- Gemini 1.5 Pro: Multimodal, capable of working with large contexts (1M+ tokens).

- PaLM 2: Previously used for conversational and code tasks (being phased out for Gemini).

Amazon SageMaker supports:

- Fine-tuning on Amazon Titan foundation models.

- Custom model training on open-weight models like LLaMA, Falcon, and Mistral.

These platforms let you orchestrate everything—from data preprocessing to model evaluation and hosting—within a single environment. While more complex, they offer flexibility, security, and integration with enterprise systems.

Use case examples:

- Training Titan on millions of customer feedback logs.

- Building a custom insurance claim processing model with Gemini on Vertex AI.

4. Using AutoML Tools for No-Code AI Training

If you’re a non-developer or looking for rapid prototyping, AutoML platforms provide a no-code or low-code interface to train AI using structured or unstructured data.

Top tools in 2025 include:

- Google Cloud AutoML: Now integrated with Gemini models for text and vision tasks.

- Microsoft Azure AutoML: Automated ML in Azure Machine Learning offers a low-code interface for training models on tabular, text, and image data.

- H2O.ai: Offers open-source and enterprise versions for model training without deep ML knowledge.

- MonkeyLearn / Akkio / Obviously.ai: For training models on small business data without writing code.

These platforms handle preprocessing, feature selection, training, and evaluation automatically. While they may not match the flexibility of open-source libraries, they drastically reduce the technical barrier to entry.

Use case examples:

- Training a sales prediction model on Excel data.

- Building a classifier for customer reviews using drag-and-drop interfaces.

Real-World Use Cases for AI Training

At iTech Gen, we’ve observed several highly practical and effective ways AI is being trained using real-world data. These use cases demonstrate that AI doesn’t need to be flashy or experimental to be valuable—it just needs to be useful in solving day-to-day problems with the right data and logic behind it. Below are some standout examples where training AI models on private or internal data has produced meaningful business impact.

1. Answering Questions Using Data from SQL Databases

One of the most discussed and implemented use cases is training AI to respond to natural language questions using structured data from SQL databases. In this setup, the AI is not trained in the traditional sense but integrated with a database in a way that it can understand questions and convert them into SQL queries behind the scenes.

A backend service (commonly built using Node.js or Python) listens for user input, analyzes the intent, fetches relevant data from a PostgreSQL or MySQL database, and then presents that data to the AI model. The AI then formats the final response in clear, natural language.

For example, a business intelligence dashboard might allow users to ask, “What was our top-selling product last month?” The backend queries the database, gets the sales data, and the AI turns it into: “Your top-selling product last month was the Smart Fitness Watch with 2,100 units sold.”

This solution is especially useful for operations teams, managers, and customer support teams who don’t have SQL knowledge but need access to accurate data in real time.
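The flow described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `sales` table, the keyword check standing in for intent analysis, and the string template standing in for the AI model that would phrase the final answer from the fetched facts.

```python
import sqlite3


def build_sales_db():
    """Create a small in-memory sales table (hypothetical schema and data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (product TEXT, units INTEGER, sold_on TEXT)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [
            ("Smart Fitness Watch", 1200, "2024-05-03"),
            ("Smart Fitness Watch", 900, "2024-05-20"),
            ("Wireless Earbuds", 1500, "2024-05-11"),
            ("Wireless Earbuds", 3000, "2024-04-02"),
        ],
    )
    return conn


def top_selling_product(conn, month):
    """Return (product, total_units) for the best seller in `month` ('YYYY-MM'), or None."""
    return conn.execute(
        """
        SELECT product, SUM(units) AS total
        FROM sales
        WHERE strftime('%Y-%m', sold_on) = ?
        GROUP BY product
        ORDER BY total DESC
        LIMIT 1
        """,
        (month,),
    ).fetchone()


def answer_question(conn, question, month):
    """Route a question to a vetted query, then phrase the result in natural language."""
    text = question.lower()
    if "top-selling" in text or "top selling" in text:
        result = top_selling_product(conn, month)
        if result is None:
            return f"No sales were recorded for {month}."
        product, units = result
        # In a real system the fetched facts would be passed to the AI model to phrase.
        return f"Your top-selling product in {month} was the {product} with {units:,} units sold."
    return "Sorry, I can't answer that question yet."
```

Routing questions to a fixed set of parameterized queries is a conservative design choice: it keeps the model from running arbitrary SQL against your database, at the cost of supporting fewer question types.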

2. Training AI with Internal Customer Support Data

Many organizations maintain years of email threads, ticket logs, and help center interactions. Training AI with this kind of data allows companies to build custom support bots or internal tools that mirror how their best agents respond.

In one implementation we reviewed, the training dataset included thousands of support tickets, each paired with a resolution response. These were formatted into prompt-response pairs (JSONL) and used to fine-tune a base model. The AI was then tested against common user queries and outperformed generic AI by delivering company-specific, policy-aligned answers with a tone that matched the brand’s voice.

This kind of training reduces response time, improves consistency in answers, and frees up human agents for more complex issues.

3. Personalized Product Recommendations

Another common use case is training AI models on historical purchase and browsing data to improve product recommendations. While large e-commerce platforms use advanced data science models, smaller businesses can train compact AI models using data from their own user base.

Using fields like customer ID, product ID, time of purchase, and browsing history, the business builds a dataset to identify buying patterns. This data is then used to fine-tune a recommendation model. Once trained, the AI can make smart suggestions like: “Customers who bought this also purchased…” or “Based on your past interests, here are some new arrivals you might like.”

Unlike traditional rule-based recommendation engines, AI trained on actual customer behavior can adapt faster and produce more relevant results.
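To make the "Customers who bought this also purchased" idea concrete, here is a minimal co-purchase counter. It is a simple counting baseline rather than a fine-tuned model, and the function names and sample data are hypothetical, but it shows the kind of pattern a trained recommender learns from purchase history.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_co_purchase_index(purchases):
    """Map each product to a Counter of products bought by the same customers.

    `purchases` is an iterable of (customer_id, product_id) pairs.
    """
    baskets = defaultdict(set)
    for customer_id, product_id in purchases:
        baskets[customer_id].add(product_id)

    index = defaultdict(Counter)
    for products in baskets.values():
        # Count every ordered pair once per customer who bought both items.
        for first, second in permutations(products, 2):
            index[first][second] += 1
    return index


def also_bought(index, product_id, limit=3):
    """Return up to `limit` products most often bought together with `product_id`."""
    return [product for product, _ in index.get(product_id, Counter()).most_common(limit)]
```

With a purchase history where every watch buyer also bought a strap, `also_bought(index, "watch")` would rank the strap first.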

4. Internal Document Search and Q&A Systems

Organizations often struggle with scattered internal documentation—HR policies, onboarding manuals, training guides, SOPs, and more. Training AI to search through this information and answer questions accurately has proven to be one of the most time-saving use cases.

The process typically involves:

- Collecting all internal documents.

- Splitting them into meaningful sections.

- Creating embeddings for each section (turning them into numerical vectors).

- Storing those vectors in a database.

- When a user asks a question, the system finds the most relevant document section and feeds it to the AI to generate an answer.
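The steps above can be sketched as follows. Two parts are stand-ins and should be read as assumptions: `embed` uses simple word counts where a real system would call an embedding model, and a Python list plays the role of the vector database.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in embedding: a bag-of-words count vector (a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(sections):
    """Store each section alongside its vector (the list stands in for a vector database)."""
    return [(section, embed(section)) for section in sections]


def most_relevant(index, question):
    """Return the stored section whose vector is closest to the question's vector."""
    query = embed(question)
    return max(index, key=lambda item: cosine(query, item[1]))[0]


def build_prompt(index, question):
    """Combine the retrieved section and the question into a prompt for the AI model."""
    context = most_relevant(index, question)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Note that no model is trained here: the documents are only indexed, so updating a policy means re-embedding one section rather than retraining anything.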

This setup is ideal for IT teams, HR departments, and legal advisors who handle recurring queries but don’t want to rewrite the same answers again and again.

5. Training AI on Financial Reports and Dashboards

Another powerful use case we’ve seen is training AI to assist with financial analysis. In this scenario, AI models are trained or prompted using financial reports, balance sheets, and income statements.

Finance teams integrate the AI with their internal financial dashboards or export reports from their accounting software. The AI is trained or instructed to interpret the meaning behind figures, trends, and ratios. It can then answer questions such as:

- “Why did Q2 profit drop compared to Q1?”

- “Which department exceeded its budget in March?”

This approach saves hours of manual data review and empowers non-financial users (like department heads) to get financial insights without needing spreadsheet skills.
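For a question like the budget example above, the figures are usually computed in code and then handed to the AI to explain. The sketch below shows that computation step under assumed field names (`department`, `month`, `budget`, `actual`); the output string is the kind of fact summary you might pass to the model.

```python
def over_budget(rows, month):
    """Return (department, overrun) pairs for `month`, largest overrun first."""
    overruns = [
        (row["department"], row["actual"] - row["budget"])
        for row in rows
        if row["month"] == month and row["actual"] > row["budget"]
    ]
    return sorted(overruns, key=lambda item: item[1], reverse=True)


def facts_for_model(rows, month):
    """Summarize the overruns as plain text that an AI model can turn into an answer."""
    results = over_budget(rows, month)
    if not results:
        return f"No department exceeded its budget in {month}."
    return "; ".join(
        f"{department} exceeded its {month} budget by {amount:,}"
        for department, amount in results
    )
```

Keeping the arithmetic in code and the wording in the model reduces the risk of the AI misreading or inventing numbers.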

Conclusion

Training AI with use cases using your own data is a powerful way to create smarter, more efficient systems tailored specifically to your business needs. Whether you’re building customer support bots, personalized recommendation engines, or internal document Q&A tools, the key lies in properly preparing your data, choosing the right training approach, and continuously validating your model’s performance. Ethical and privacy considerations should always guide your efforts to protect sensitive information while maximizing AI’s potential.

By applying these data-driven techniques, businesses can unlock faster insights, improve customer experiences, and make informed decisions, all without the overhead of building AI from scratch. With accessible tools ranging from API fine-tuning to AutoML platforms, anyone can leverage AI training today to transform data into actionable intelligence.

Pankaj Arora (Founder & CEO)

Pankaj Arora is the Founder & CEO of iTechGen, a visionary leader with a deep passion for AI and technology. With extensive industry experience, he shares expert insights through his blogs, helping businesses harness the power of AI to drive innovation and success. Committed to delivering customer-first solutions, Pankaj emphasizes quality and real-world impact in all his endeavors. When not leading iTechGen, he explores emerging technologies and inspires others with his thought leadership. Follow his blogs for actionable strategies to accelerate your digital transformation and business growth.
