OpenAI API Cost: All You Need To Know

OpenAI’s API is used for all kinds of things: chatbots, writing tools, code helpers, even image generation. It works through models like GPT-3.5, GPT-4 Turbo, and now GPT-4.5. You send text, get a reply, and use that to build features into your app or website. Sounds simple, until the bill arrives.

Most users don’t think much about the price at first. But over time, they notice one thing: it adds up fast. Whether you’re using it for content, support, or automation, the OpenAI API cost can catch you off guard if you’re not careful.

To put things into perspective, as of May 2025, ChatGPT has nearly 800 million weekly active users and around 122.58 million daily users, highlighting just how deeply it has integrated into people’s daily routines.

In this blog, we’ll break down everything you need to know about OpenAI API costs. We’ll cover the different pricing models, factors that affect costs, and tips to manage your expenses effectively. Whether you’re a developer, a business owner, or just curious, this guide will help you navigate the pricing landscape with confidence.

How OpenAI API Cost Works

If you’re planning to use OpenAI’s tools regularly — whether for a chatbot, content generation, or app features — it’s important to know how the billing works. Many first-time users assume it’s a fixed rate. It’s not. You’re charged based on usage, and the more you use, the more it costs. This section will break down the two things that decide your bill: the pay-per-use model and how tokens are counted.

Pay-per-Use Model Explained

OpenAI doesn’t charge a flat fee. You only pay when you use the API — like a prepaid phone plan where every call, message, or MB used is counted.

Every time your app or tool sends a request to OpenAI, you’re charged for that request. The charge depends on how much text is involved — both what you send and what you receive.

There’s no fixed monthly plan. If you use it a little, your bill stays low. If your app handles hundreds of prompts daily, your monthly cost can shoot up. Many startups realize this only when they get their first invoice.

This model gives flexibility but also needs attention — especially if you’re scaling.

Tokens and Their Role in Cost Calculation

OpenAI doesn’t charge you for the number of words or characters. It charges for tokens. And tokens are not the same as words.

A token is a small chunk of text — usually 3–4 characters long. Some words are one token, some words break into two. For example:

- “Data” = 1 token

- “Understanding” = 2 tokens

- “Let’s build it now” = around 5 tokens

Now here’s the important bit: You get charged for both input and output tokens.

That means:

- You send a prompt = input tokens

- OpenAI gives a reply = output tokens

- Your cost = total of both

If you ask a small question but get a long reply, the reply costs more than your prompt. It adds up fast, especially with models like GPT-4 or GPT-4.5 that give detailed answers by default.

Let’s break it down with an example:

- You send a 100-token prompt

- The model replies with 300 tokens

- Your total token usage = 400

- Multiply the input tokens by the model’s input rate and the output tokens by its output rate, add the two, and that’s what you’re charged

| Text | Approx. Token Count |
| --- | --- |
| Hello there | 2 tokens |
| How are you doing today? | 6 tokens |
| Write a blog about mobile apps | 8–10 tokens |
| Full user prompt (with context) | 100–200 tokens |
| Detailed response | 300–800 tokens |
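For a quick sanity check before you reach for a real tokenizer, the "3–4 characters per token" rule of thumb can be turned into a rough estimator. This is only a sketch: the function name and the default of 4 characters per token are assumptions for illustration, and the result will not match the model's real tokenizer exactly. For exact counts, a proper tokenizer such as OpenAI's open-source tiktoken library is the better tool.

```python
import math


def rough_token_estimate(text: str, chars_per_token: float = 4.0) -> int:
    """Approximate a token count from character length.

    Heuristic only: real tokenizers split text differently, so treat the
    result as a ballpark figure for budgeting, not a billing number.
    """
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


if __name__ == "__main__":
    for sample in ["Hello there", "How are you doing today?"]:
        print(f"{sample!r}: ~{rough_token_estimate(sample)} tokens")
```

Expect the estimate to run a little high or low for any single phrase. It is most useful for long prompts, where the per-word differences average out.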

Also, not all models cost the same.

- GPT-3.5 Turbo is cheap and fast — best for basic tasks

- GPT-4 Turbo costs more, but gives better quality and can follow complex instructions

- GPT-4.5 is powerful but very expensive — not ideal for small, frequent tasks

Here’s a look at the latest pricing (as of May 2025):

| Model | Input Tokens per Million | Output Tokens per Million |
| --- | --- | --- |
| GPT-3.5 Turbo | $0.50 | $1.50 |
| GPT-4 Turbo | $10.00 | $30.00 |
| GPT-4.5 | $75.00 | $150.00 |

(Source: OpenAI Pricing, May 2025)

That means:

- 1 prompt and 1 reply using GPT-3.5 Turbo costs a fraction of a cent (about $0.0005 for 100 input and 300 output tokens)

- The same task using GPT-4.5 costs about $0.05, roughly 100x more
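The arithmetic behind that comparison can be sketched in a few lines. The prices are the GPT-3.5 Turbo and GPT-4.5 rates from the table above. The dictionary keys and the function name are just labels chosen for this example, not official identifiers you must use.

```python
# Prices in USD per 1 million tokens, copied from the table above (May 2025).
# The keys are illustrative labels for this sketch.
PRICES_PER_MILLION = {
    "gpt-3.5-turbo": {"input": 0.50, "output": 1.50},
    "gpt-4.5": {"input": 75.00, "output": 150.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request, pricing input and output separately."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # The 100-token prompt and 300-token reply from the earlier example.
    cheap = request_cost("gpt-3.5-turbo", 100, 300)
    pricey = request_cost("gpt-4.5", 100, 300)
    print(f"GPT-3.5 Turbo: ${cheap:.4f}")
    print(f"GPT-4.5:       ${pricey:.4f}")
    print(f"Ratio:         {pricey / cheap:.0f}x")
```

Because input and output are priced separately, a short prompt with a long reply is dominated by the output rate, which is the higher of the two.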

Even small projects can quickly cross 1 million tokens if you’re not keeping count — especially with longer replies or history-based responses.

Also, system instructions like “Respond in a polite tone”, instruction guides, and past chat history all count as input tokens. Many users overlook this.

Pro tip: If you’re feeding previous conversation history to get better replies, you’re paying for all of it again on every request.

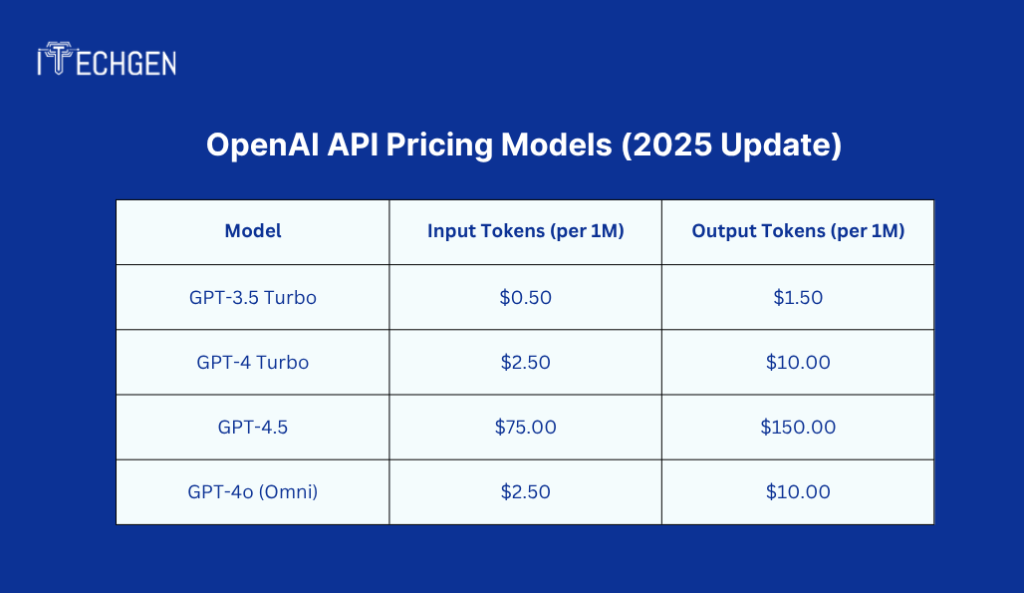

OpenAI API Pricing Models (2025 Update)

Choosing the right model isn’t just about speed or quality — it directly affects how much you’ll pay at the end of the month. The OpenAI API cost varies based on which model you pick and what you’re using it for. In 2025, OpenAI offers multiple models for text, image, and fine-tuning tasks — each priced differently. Let’s look at them one by one.

GPT-4, GPT-4 Turbo, GPT-3.5 Turbo Pricing

These are the most commonly used models for generating text, handling chatbots, writing content, or processing tasks in your app.

The OpenAI API cost for each of these models depends on the number of tokens processed — both in and out. The more complex the task, the more tokens used. And the more tokens you use, the more you pay.

Here’s the current pricing structure as of May 2025:

| Model | Input Tokens (per 1M) | Output Tokens (per 1M) |
| --- | --- | --- |
| GPT-3.5 Turbo | $0.50 | $1.50 |
| GPT-4 Turbo | $10.00 | $30.00 |
| GPT-4.5 | $75.00 | $150.00 |
| GPT-4o (Omni) | $2.50 | $10.00 |

Source: OpenAI Pricing – May 2025

Let’s say your product sends 100K prompts, each with about 100 input tokens, and gets a 300-token response to each.

If you’re using GPT-3.5 Turbo, your OpenAI API cost comes to roughly $50.

With GPT-4.5, the same traffic would cost over $5,000.

That’s why model selection matters. GPT-4.5 is the most powerful, but it’s also the most expensive — and it’s not always needed for basic tasks.

Embeddings & Fine-Tuning Costs

If you want your app to remember previous interactions, search large databases, or deliver tailored results — embeddings and fine-tuning come into play. These features help personalize outputs and make your product feel “smarter,” but they come with their own price.

The OpenAI API cost for embedding and fine-tuning tasks depends on how many tokens you’re processing and which model you’re using for training or querying.

Here’s a basic cost breakdown:

| Task | Price per 1K tokens |
| --- | --- |
| Text embeddings | $0.0001 – $0.0004 |
| Fine-tuning (GPT-3.5 base) | $0.003 – $0.012 (train) |
| Using fine-tuned model | $0.006 – $0.012 (query) |

Source: OpenAI Fine-Tuning Docs

You’re not just paying to train the model. Every time you use your fine-tuned version, you pay again — based on how many tokens it processes. This can double or triple your OpenAI API cost over time if not planned carefully.

For apps with limited budgets, pre-trained models might be enough. For large systems that need custom logic (like legal chatbots or domain-specific tools), fine-tuning can be worth the investment.

DALL·E Image Generation Pricing

If you’re building features that need on-the-fly images — like design tools, concept previews, or product ads — DALL·E is OpenAI’s tool for that. It can turn text prompts into visuals instantly. While the feature is powerful, the cost depends on image size and edits.

Here’s how OpenAI charges for image generation through DALL·E:

| Image Type | Price per Image |
| --- | --- |
| 256×256 (Low Res) | $0.01 |
| 512×512 (Medium Res) | $0.02 |
| 1024×1024 (High Res) | $0.04 |
| HD with editing tools | $0.17+ |

Source: OpenAI Pricing – Image

Simple visuals won’t cost much, but if your app is generating 1000+ images daily — your OpenAI API cost can increase fast, especially if you’re using higher resolutions or image editing tools (like inpainting or outpainting).

Also, every retry or tweak counts as a new generation — even if it’s just a small change. So bulk generations or iterative designs can eat into your budget quickly if not managed smartly.
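To see how retries change the bill, here is a minimal sketch of a monthly image budget. The function name, the 30-day month, and the "attempts per image" figure are assumptions for illustration. The per-image price comes from the table above.

```python
def monthly_image_cost(
    images_per_day: int,
    price_per_image: float,
    attempts_per_image: float = 1.0,
    days: int = 30,
) -> float:
    """Estimate monthly spend; every retry is billed as a new generation."""
    return images_per_day * attempts_per_image * price_per_image * days


if __name__ == "__main__":
    # 1,000 high-res images a day at $0.04 each.
    first_try = monthly_image_cost(1000, 0.04)
    with_retries = monthly_image_cost(1000, 0.04, attempts_per_image=3)
    print(f"Accepted on first try:  ${first_try:,.2f}/month")
    print(f"Three attempts on avg:  ${with_retries:,.2f}/month")
```

If users typically regenerate an image a couple of times before accepting it, the retry multiplier matters as much as the resolution you pick.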

Factors Affecting OpenAI API Costs

The OpenAI API cost isn’t fixed. It depends on how you use it — what you send, which model you choose, and how much context you feed into every call. Even small tweaks, like storing chat history or switching models, can silently increase your monthly bill.

Let’s break down the top three cost drivers.

Input vs. Output Tokens

When you use OpenAI’s API, you’re charged for two things: what you send (input tokens) and what you get back (output tokens). And the cost structure is not equal. For every model in the table below, output tokens cost more than input tokens.

For example, if you send a short prompt like:

“Give me 5 subject lines for a sale.”

That may use only 10–20 tokens. But if the model replies with detailed, long subject lines with explanations, the output could easily go over 150–200 tokens. You’re charged for the total — both input and output — in every call.

Here’s what that looks like in pricing:

| Model | Input (1M tokens) | Output (1M tokens) |
| --- | --- | --- |
| GPT-3.5 Turbo | $0.50 | $1.50 |
| GPT-4 Turbo | $10.00 | $30.00 |
| GPT-4.5 | $75.00 | $150.00 |

This matters most when you’re building apps that respond to users all day — like email generators, chatbots, or helpdesk tools. Each word adds up.

Model Selection (Cheaper vs. Advanced Models)

Different models have different pricing. GPT-3.5 is light and fast. GPT-4 Turbo is more refined. GPT-4.5 is high-end — and heavy on your wallet.

| Model | Best For | Monthly Cost (1M in + 1M out) |
| --- | --- | --- |
| GPT-3.5 | Basic copy, chats, small tools | ~$2.00 |
| GPT-4 Turbo | Complex replies, smart logic | ~$40.00 |
| GPT-4.5 | Deep analysis, enterprise use | ~$225.00 |

Source: OpenAI Pricing – May 2025

Now here’s what happens in real projects: teams start with GPT-3.5, but switch to GPT-4.5 thinking it’ll give “better results.” The result? A 100x jump in OpenAI API cost without checking if the upgrade actually improved output quality. For many use cases, GPT-3.5 is still more than enough.

Tip: Try different models side by side and check the results. Don’t upgrade unless it brings real value.

Context Length Impact

This one slips under the radar.

Let’s say you’re building a smart assistant. You want it to “remember” the last few messages — so it can reply more naturally. That memory? It comes at a cost. Every past message you send again as part of the conversation counts as input tokens — even if the user doesn’t see it.

Here’s how it builds up:

| Scenario | Estimated Tokens |
| --- | --- |
| Just current question | ~50–100 |
| With a few earlier responses | ~300–500 |
| With long memory (multiple turns) | ~700–1000+ |

One dev team shared this on Reddit — their app was running fine, with bills around $30/month. Then they added a memory feature that included past chats in each new response. The token usage quietly went up. Same traffic, same number of users — but the monthly cost shot up to $180 without warning. Nothing broke technically, but their pricing did.

So yes, adding memory improves user experience — but it also increases your OpenAI API cost with every message.

Tip: Only keep the last 1–2 messages unless full context is absolutely necessary.
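One way to apply that tip is to trim the conversation before each call. The sketch below assumes messages are stored as a list of role/content dictionaries, a common chat format. The function name and the choice to always keep system messages are assumptions for this example, not a prescribed method.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message], keep_last: int = 2) -> List[Message]:
    """Keep system messages plus the most recent `keep_last` other messages."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    # Note: others[-0:] would return everything, so handle zero explicitly.
    recent = others[-keep_last:] if keep_last > 0 else []
    return system + recent


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "Respond in a polite tone."},
        {"role": "user", "content": "What is your return policy?"},
        {"role": "assistant", "content": "You can return items within 30 days."},
        {"role": "user", "content": "Does that include sale items?"},
    ]
    for message in trim_history(history, keep_last=2):
        print(message["role"], "->", message["content"])
```

Everything dropped here is text you no longer pay for as input tokens. The trade-off is that the model cannot refer back to it, so test how many turns your use case really needs.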

Comparing OpenAI API Costs with Alternatives

Before committing to OpenAI, it’s worth checking how it stacks up against other popular tools. Whether you’re running a business, building a SaaS product, or just testing an idea, your choice of provider will directly affect cost, performance, and control. Let’s break it down.

vs. Anthropic Claude

Claude is positioned as OpenAI’s closest competitor. It works in a similar way — you send a prompt, it gives a reply — but the pricing and behavior are slightly different.

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
| --- | --- | --- |
| Claude 3 Haiku | ~$0.25 | ~$1.25 |
| Claude 3 Sonnet | ~$3.00 | ~$15.00 |
| Claude 3 Opus | ~$15.00 | ~$75.00 |

Source: Anthropic Pricing – 2025

Compared to the OpenAI API cost, Claude is more affordable on its smallest model (Haiku). It’s fast and decent for lightweight content or summaries. Claude’s largest model (Opus) is expensive too: at roughly $15 input and $75 output per 1M tokens it still undercuts GPT-4.5, but it comes without the same developer ecosystem.

Another difference is context size. Claude accepts more tokens per request (up to 200K), which means it can handle long documents without chunking. That’s great for legal tech or academic tools — but again, you’ll pay for every token it processes.

Verdict: If your work involves large documents or summaries, Claude can save cost. But for coding or multi-turn chats, OpenAI still performs better.

vs. Google Gemini

Google’s Gemini models are picking up fast — especially on Android devices and inside Google Search. Gemini Ultra is their top-tier model, built for advanced tasks. But unlike OpenAI, Google hasn’t shared clear API pricing yet. Most developers get access to Gemini Pro 1.5 for free via Google AI Studio, while Ultra is reserved for enterprise use (as of 2025).

| Model | Google Pricing (2025) | Notes | OpenAI Equivalent |
| --- | --- | --- | --- |
| Gemini Pro 1.5 | Free via Google AI Studio | Limited access, generous quota | GPT-3.5 Turbo / GPT-4o |
| Gemini Ultra | Not publicly available | Enterprise only, high-end model | GPT-4 Turbo |

For now, the Gemini API is still in early phases for most developers. But if you use Google’s ecosystem (Docs, Gmail, YouTube, etc.), it might offer better integration than OpenAI.

When it comes to OpenAI API cost, OpenAI gives more clarity and flexibility for paid usage. Gemini is still evolving. It’s free for now, but may introduce token-based billing later.

Verdict: Great for Google users and basic AI tasks. But for custom tools, OpenAI still wins on documentation, control, and model maturity.

vs. DeepSeek

DeepSeek is one of the newer players — often mentioned in Reddit dev circles for being open-source and Chinese-backed. It’s positioned as a cheaper, transparent LLM with coding and multilingual capabilities.

| Model | Approx. Pricing (as of 2025) | Focus Area | OpenAI Equivalent |
| --- | --- | --- | --- |
| DeepSeek Coder | Free (open-source) | Code generation, dev tools | GPT-3.5 Turbo / GPT-4o |
| DeepSeek-VL | Not publicly listed (as of now) | Multimodal (text + image handling) | GPT-4o with vision |
| Claude 3 Opus | ~$15 per 1M tokens | High-quality writing, analysis | GPT-4 Turbo |
| Claude 3 Sonnet | ~$3 per 1M tokens | Balanced performance + speed | GPT-3.5 Turbo |
| Gemini 1.5 Pro | $0.50–$1.50 per 1K input tokens* | Multimodal, strong reasoning | GPT-4 Turbo / GPT-4o |

While OpenAI offers polished APIs and extensive documentation, DeepSeek focuses more on open access and experimentation. If you’re looking for budget-friendly coding support, DeepSeek can be a good choice — especially for self-hosting.

But be ready to deal with limited support and a smaller community. If something breaks, you’ll be on your own.

Verdict: Best for developers who want full control, don’t mind tweaking, and need something low-cost or local. For business-ready tools, OpenAI still has the edge.

vs. Self-hosted LLMs

Running your own LLM — like LLaMA 3, Mistral, or DeepSeek — can save a lot of money in the long run, but comes with its own headaches.

| Option | Cost to Run | Pros | Cons |
| --- | --- | --- | --- |
| LLaMA 3 (8B) | $20–$100/month (cloud or local GPU) | Full control, no per-call cost | Setup, security, scaling |
| Mistral | Open-source, light | Fast, efficient | Fewer features, less accurate |
| GPT-J/GPT-NeoX | Free to run | Community-supported | Heavy infra, lower quality |

With OpenAI, you pay based on usage — and the OpenAI API cost is predictable if monitored. With self-hosted models, your cost is mostly in infrastructure (servers, GPUs, electricity). But you’ll need to manage updates, security, performance, and scaling yourself.

Self-hosting works best for:

- High-volume traffic apps

- Privacy-sensitive tools

- Products with a fixed prompt style

But if you just want to ship fast or test ideas, the time spent setting things up often outweighs the savings.

Verdict: Best for large teams, AI startups, or security-first projects. Otherwise, OpenAI remains the easiest to plug and play.
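A rough break-even check can help decide when self-hosting starts to pay off. The sketch below compares a fixed monthly infrastructure bill with per-token API pricing. It assumes equal input and output volume and ignores engineering time, both simplifications made for this example. The $100/month and the GPT-3.5 Turbo rates come from the tables in this article, and the function names are hypothetical.

```python
def api_monthly_cost(
    input_millions: float,
    output_millions: float,
    input_price: float,
    output_price: float,
) -> float:
    """API spend for a month, with token volumes given in millions."""
    return input_millions * input_price + output_millions * output_price


def break_even_volume(
    infra_cost: float, input_price: float, output_price: float
) -> float:
    """Millions of input tokens (with equal output volume) at which the
    API bill matches a fixed monthly infrastructure cost."""
    return infra_cost / (input_price + output_price)


if __name__ == "__main__":
    # $100/month of infrastructure vs GPT-3.5 Turbo at $0.50 / $1.50 per 1M.
    volume = break_even_volume(100.0, 0.50, 1.50)
    print(f"Break-even: {volume:.0f}M input + {volume:.0f}M output tokens per month")
```

Below that volume the API is cheaper on paper. Above it, self-hosting starts to win, provided the smaller model is good enough for your task and you can absorb the maintenance work.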

Tips to Reduce OpenAI API Costs

If you’re using OpenAI regularly — whether for content tools, chatbots, or automations — your bill can creep up faster than expected. But the good news is: you don’t need to reduce your output to control the cost. Just being smart about how you send prompts, what you cache, and which model you pick can make a big difference.

Here’s how to bring down your OpenAI API cost without compromising on quality.

Optimizing Prompts to Reduce Token Usage

The API charges you based on how many tokens you use — not how many tasks you complete. So if your prompts are long-winded or repetitive, you’re burning tokens for no reason.

Let’s take an example:

| Prompt Style | Estimated Tokens |
| --- | --- |
| “Can you please help me summarize…” | ~12 tokens |
| “Summarize this:” | ~3 tokens |

Now imagine you’re running this call a thousand times a day. That small difference becomes hundreds of thousands of tokens per month.
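Here is that estimate as a quick calculation, assuming the wordy prompt comes to roughly 12 tokens and the short one to roughly 3. The function name and the 30-day month are assumptions for illustration.

```python
def monthly_tokens_saved(
    long_prompt_tokens: int,
    short_prompt_tokens: int,
    calls_per_day: int,
    days: int = 30,
) -> int:
    """Input tokens saved per month by switching to the shorter prompt."""
    return (long_prompt_tokens - short_prompt_tokens) * calls_per_day * days


if __name__ == "__main__":
    saved = monthly_tokens_saved(12, 3, calls_per_day=1000)
    print(f"Tokens saved per month: {saved:,}")
```

The saving per call is tiny. It only becomes meaningful because the same instruction text is resent on every request.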

Same applies for outputs. If you’re not limiting how long the model replies (with max_tokens), it may return long, chatty answers that cost more — especially with expensive models like GPT-4.5.

Quick Fixes:

- Remove filler words from prompts

- Set a max token limit for replies

- Use specific instructions to avoid long, vague responses

Reducing prompt size by even 20–30% can noticeably cut your OpenAI API cost — without changing your results.
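As a sketch of the reply limit and the "specific instructions" fix, the snippet below builds a chat-style request body with `max_tokens` set. It only constructs the payload and does not send anything. The helper name, the default model label, and the wording of the system instruction are assumptions for this example. Check the API reference for the model you use, since the name of the output-limit parameter can differ between models.

```python
import json


def build_chat_request(
    prompt: str, model: str = "gpt-3.5-turbo", max_tokens: int = 120
) -> dict:
    """Build a chat-style request body with a capped reply length."""
    return {
        "model": model,
        "messages": [
            # A specific instruction discourages long, vague answers.
            {"role": "system", "content": "Answer in at most three sentences."},
            {"role": "user", "content": prompt},
        ],
        # Upper bound on the number of output tokens the reply can use.
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    payload = build_chat_request("Summarize this: our Q2 sales rose 8%.")
    print(json.dumps(payload, indent=2))
```

A cap alone can cut a reply off mid-sentence, which is why pairing it with an explicit length instruction tends to work better than either on its own.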

Caching Responses

If your app handles repeated queries — like common FAQs, product descriptions, or template-based answers — there’s no need to call the API every single time.

For example:

“What’s your return policy?”

“What is Flutter?”

“How much does GPT-4 cost?”

These don’t need fresh answers each time. You can store them (with context) and just serve cached versions when asked again.

Here’s what caching can save:

| Scenario | API Cost Without Caching | API Cost With Caching |
| --- | --- | --- |
| 10,000 FAQ calls/month | $15–$200+ (model-dependent) | $0–$5 (only for edge cases) |
| Blog summaries reused | $50+/month | $5 or less |

Implementation ideas:

- Store common outputs locally or in a database

- Use Redis or simple file-based caching for quick lookup

- Add a timestamp for how “fresh” each cached response is

For high-traffic apps, caching is often the single biggest way to reduce OpenAI API cost.
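The implementation ideas above can be sketched as a small in-memory cache with a freshness window. The class name, the normalization rule, and the `fetch` callable (a stand-in for whatever function actually calls the API) are all hypothetical. A production setup would more likely use Redis or a database, as suggested above.

```python
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """In-memory cache keyed by normalized prompt, with a freshness window."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 3600.0):
        self._fetch = fetch  # hypothetical function that calls the API
        self._ttl = ttl_seconds
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, prompt: str) -> str:
        # Normalize case and whitespace so trivial variations share one entry.
        key = " ".join(prompt.lower().split())
        now = time.monotonic()
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # cache hit: no API call, no token cost
        answer = self._fetch(prompt)
        self._store[key] = (now, answer)  # timestamp records freshness
        return answer


if __name__ == "__main__":
    def fake_api(prompt: str) -> str:
        print("  (calling API)")
        return f"Answer to: {prompt}"

    cache = ResponseCache(fake_api, ttl_seconds=600)
    print(cache.get("What is Flutter?"))
    print(cache.get("what is flutter?"))  # served from the cache
```

Exact-match caching like this only helps when users phrase questions the same way, so it suits FAQ buttons and templated prompts better than free-form chat.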

Choosing the Right Model for Your Needs

It’s tempting to use GPT-4.5 for everything — it sounds smarter, it writes better, it reasons well. But it also costs 100x more than GPT-3.5 for the same task in many cases.

Here’s a quick look at the OpenAI API cost for models:

| Model | Cost per 1M Tokens (Input + Output) | Best For |
| --- | --- | --- |
| GPT-3.5 Turbo | ~$2.00 | Generic tasks, fast replies |
| GPT-4 Turbo | ~$40.00 | Complex content, QA bots |
| GPT-4.5 | ~$225.00 | Deep reasoning, sensitive use cases |

Use GPT-3.5 if:

- You’re doing summaries, short answers, or SEO tasks

- The tone and logic don’t have to be perfect

Use GPT-4 Turbo if:

- You’re building tools where tone, grammar, and context matter

Use GPT-4.5 only if:

- You need detailed logic, long-term memory, or fine-tuned results

Pro Tip: Run A/B tests. Generate results from different models and compare. If your users don’t notice the difference, you’ll save a lot by dropping to a lighter model.

OpenAI API Free Tier & Rate Limits

If you’re just starting with the OpenAI API or building a tool on a budget, understanding the free tier and usage limits is key. A lot of users assume the API is free to try — but that’s no longer the case. OpenAI has shifted to a prepaid model, where you need to add funds before using the service. On top of that, rate limits decide how much you can send or receive every minute.

This section breaks down what’s included in the base tier, what happens after you pay, and how to avoid surprise bills when usage spikes. Knowing these details upfront can save you time, money, and a lot of backend debugging later.

Free Tier Eligibility & Limits

OpenAI’s free tier has evolved over time. Previously, new users received complimentary credits to explore the API. However, as of now, OpenAI no longer offers free credits upon sign-up. To begin using the API, users are required to add a minimum payment of $5 to their account.

Once you’ve added funds, your account is placed in Tier 1, which comes with specific rate limits:

| Tier | Minimum Payment | Rate Limits (Requests per Minute / Tokens per Minute) |
| --- | --- | --- |
| 1 | $5.00 | 20 RPM / 40,000 TPM |

To access higher tiers with increased limits, additional payments and time-based criteria apply.

How to Avoid Unexpected Charges

Managing API usage is crucial to prevent unforeseen expenses. Here are some actionable steps:

- Monitor Usage Regularly: Keep an eye on your API consumption through the OpenAI dashboard. This helps in identifying unusual patterns early.

- Set Hard and Soft Limits: Implement usage caps within your application to prevent exceeding your budget. Soft limits can trigger warnings, while hard limits can halt usage.

- Secure Your API Keys: Ensure that your API keys are stored securely and not exposed in client-side code or public repositories. Unauthorized access can lead to significant charges.

- Optimize Prompt Design: Craft concise prompts to reduce token usage. Avoid unnecessary context or verbose instructions.

- Implement Caching Mechanisms: For repetitive queries, cache responses to minimize redundant API calls. This not only saves costs but also improves response times.

- Choose the Right Model: Select a model that aligns with your needs. More advanced models are costlier; if a simpler model suffices, opt for that to save on expenses.

By proactively managing your API usage and understanding the associated costs, you can harness the power of OpenAI’s tools without unexpected financial surprises.
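The hard and soft limits from the list above can also live inside your own application, independent of any dashboard setting. Below is a minimal sketch. The class and exception names are hypothetical, and the cost passed to `record` is assumed to be your own estimate of what a call will cost.

```python
class BudgetExceeded(Exception):
    """Raised when recording a cost would pass the hard limit."""


class BudgetGuard:
    """Track spend against a soft (warning) and a hard (stop) limit."""

    def __init__(self, soft_limit: float, hard_limit: float):
        if soft_limit > hard_limit:
            raise ValueError("soft_limit must not exceed hard_limit")
        self.soft_limit = soft_limit
        self.hard_limit = hard_limit
        self.spent = 0.0

    def record(self, cost: float) -> bool:
        """Add a cost. Return True once the soft limit has been reached."""
        if self.spent + cost > self.hard_limit:
            # Refuse the call rather than silently going over budget.
            raise BudgetExceeded(
                f"${self.spent + cost:.2f} would exceed the ${self.hard_limit:.2f} cap"
            )
        self.spent += cost
        return self.spent >= self.soft_limit


if __name__ == "__main__":
    guard = BudgetGuard(soft_limit=10.0, hard_limit=20.0)
    for cost in (5.0, 6.0, 10.0):
        try:
            warn = guard.record(cost)
            print(f"spent ${guard.spent:.2f}, warning={warn}")
        except BudgetExceeded as error:
            print(f"blocked: {error}")
```

Call `record` before sending a request, using an estimated cost, so that a runaway loop is stopped by the guard instead of by the invoice.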

How to Estimate Your OpenAI API Costs

Understanding the actual OpenAI API cost for your project starts with one thing — token math. Since OpenAI charges per token, even a simple prompt can add up if you’re not careful. Whether you’re building a chatbot, generating emails, or summarizing documents, knowing how many tokens you’re using is the key to avoiding bill shocks.

In this section, you’ll learn how to use OpenAI’s free tokenizer tool and explore real examples to see how costs play out across use cases.

Using OpenAI’s Token Calculator

OpenAI offers a free tool called the Tokenizer. You can copy-paste any text into it and see how many tokens it takes up — before you run it through the API.

Why this matters:

- You’re charged for both input and output tokens.

- More tokens = more cost.

- Even short prompts can balloon when you add context or conversation history.

Let’s break this down with a quick table:

| Prompt Task Description | Approx. Token Count | Input Cost (per 1K tokens) | Output Cost (per 1K tokens) | Estimated Total Cost |
| --- | --- | --- | --- | --- |
| “Summarize this email:” | 200 tokens (150 in + 50 out) | $0.005 | $0.015 | $0.00150 |
| “Summarize the following 800-word article…” | 800 tokens input + 200 output | $0.005 | $0.015 | $0.00700 |
| “Create Instagram captions for 5 product photos” | 300 tokens total | $0.005 | $0.015 | $0.00240 |
| “Generate a 1000-word blog draft on AI in marketing” | 150 tokens input, 1300 output | $0.005 | $0.015 | $0.02025 |

The tokenizer tool helps you estimate this before you start calling the API, so you can trim the fat from prompts and save on costs.

Real-World Cost Examples

To make things clearer, here’s what typical use cases might cost using GPT-4o (as of 2025), at $2.50 per 1M input tokens and $10.00 per 1M output tokens:

| Use Case | Prompt Size (tokens) | Response Size (tokens) | Total Tokens | Approx. Cost |
| --- | --- | --- | --- | --- |
| Simple Q&A chatbot | 40 | 60 | 100 | ~$0.0007 |
| Email writer | 80 | 150 | 230 | ~$0.0017 |
| Product description generator | 60 | 100 | 160 | ~$0.0012 |
| Long-form blog generation | 300 | 800 | 1100 | ~$0.0088 |

These numbers may look small, but at scale, they stack up. For instance, a support bot handling 10,000 daily chats with 100 tokens each could cost about $7/day, or $210/month, even before counting pricier models or memory features.
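The support-bot figure can be reproduced with a short projection. This sketch assumes the GPT-4o rates from the pricing table ($2.50 input and $10.00 output per 1M tokens), a 40/60 split of the 100 tokens per chat, and a 30-day month. The function names are made up for the example.

```python
INPUT_PRICE_PER_M = 2.50    # USD per 1M input tokens (GPT-4o, per the table)
OUTPUT_PRICE_PER_M = 10.00  # USD per 1M output tokens


def cost_per_chat(input_tokens: int, output_tokens: int) -> float:
    """USD cost of a single chat at the rates above."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


def projected_spend(
    chats_per_day: int, input_tokens: int, output_tokens: int, days: int = 30
) -> tuple:
    """Return (daily, monthly) USD spend for a steady workload."""
    daily = chats_per_day * cost_per_chat(input_tokens, output_tokens)
    return daily, daily * days


if __name__ == "__main__":
    daily, monthly = projected_spend(10_000, input_tokens=40, output_tokens=60)
    print(f"Daily: ${daily:.2f}  Monthly: ${monthly:.2f}")
```

Swap in your own token split and traffic numbers before launch. The output side usually dominates, so response length is the first thing to measure.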

So before launching any tool on top of the API, make sure to estimate your OpenAI API cost properly. Trim prompts, test response sizes, and plan around volume to stay on budget.

Billing & Managing OpenAI API Usage

If you’re using OpenAI APIs regularly, keeping track of your usage isn’t optional — it’s essential. You don’t want surprise bills or guesswork when budgeting. Luckily, OpenAI provides simple tools to stay in control of what you spend and how it’s used. From setting up alerts to checking usage logs, everything is designed to help you manage the OpenAI API cost without stress.

Setting usage alerts & limits

One of the easiest ways to manage OpenAI API cost is by setting usage limits and budget alerts. OpenAI lets you define a hard spending cap so your charges don’t go beyond what you can afford.

You can do this directly from your account dashboard:

- Usage limit: This sets a ceiling on how much you can spend. Once this limit is reached, your access pauses automatically.

- Email alerts: You can opt-in to get emails when your usage reaches 50%, 75%, and 100% of your budget.

Example: If your monthly limit is $20, you’ll get emails at $10, $15, and $20. It’s a simple way to prevent overspending.
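If you want the same alert logic in your own tooling, the thresholds are simple to compute. This is an illustrative sketch only: the function names are hypothetical and it mirrors the 50%, 75%, and 100% levels described above rather than any official OpenAI feature.

```python
from typing import List, Sequence


def alert_thresholds(
    budget: float, fractions: Sequence[float] = (0.5, 0.75, 1.0)
) -> List[float]:
    """Dollar amounts at which an alert should fire."""
    return [round(budget * fraction, 2) for fraction in fractions]


def newly_crossed(budget: float, before: float, after: float) -> List[float]:
    """Thresholds passed when spend moves from `before` to `after`."""
    return [t for t in alert_thresholds(budget) if before < t <= after]


if __name__ == "__main__":
    print(alert_thresholds(20.0))
    print(newly_crossed(20.0, before=9.0, after=16.0))
```

Checking which thresholds were newly crossed, rather than which are currently exceeded, avoids sending the same alert on every request.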

Where to set it:

- Go to OpenAI’s Billing Page

- Click on “Usage Limits”

- Add your budget cap in dollars

Many developers don’t set these early, and that’s where mistakes happen. A few background API calls or misconfigured loops can eat up tokens fast. Alerts help catch it before things go off track.

Monitoring API consumption

Apart from alerts, tracking how your tokens are used day-to-day helps you find patterns, fix bloated prompts, and cut costs. OpenAI gives you access to:

- Daily token usage logs

- Breakdown by model (e.g., GPT-4, GPT-3.5)

- Logs filtered by dates and endpoints

You can view this data here: OpenAI Usage Dashboard

Why it matters: Sometimes, it’s not one big project burning your budget — it’s multiple small requests adding up. We’ve seen teams lose track when usage was spread across different tools, like internal chatbots and testing environments.

Here’s a quick view of how monitoring helps lower OpenAI API costs:

| What to Monitor | Why It Helps |
| --- | --- |
| Daily usage | Spots unexpected spikes in real-time |
| Model used (GPT-4 vs 3.5) | Lets you downgrade where premium isn’t needed |
| Token size per request | Helps reduce bloated prompts |

Monitoring isn’t just for finance teams. Product managers, dev leads, and even solo builders should review their usage at least once a week. It keeps surprises away and decisions data-driven.
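If you keep your own request logs, a few lines of code can flag unusual days automatically. The record format below (date, model, tokens) is a made-up structure for this sketch, not the format of OpenAI's usage export, and the "twice the median" rule is just one simple assumption for what counts as a spike.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple

UsageRecord = Tuple[str, str, int]  # (date, model, tokens), hypothetical format


def daily_totals(records: Iterable[UsageRecord]) -> Dict[str, int]:
    """Sum token usage per day across all models."""
    totals: Dict[str, int] = defaultdict(int)
    for day, _model, tokens in records:
        totals[day] += tokens
    return dict(totals)


def find_spikes(totals: Dict[str, int], factor: float = 2.0) -> List[str]:
    """Return days whose usage exceeds `factor` times the median day."""
    if not totals:
        return []
    baseline = median(totals.values())
    return sorted(day for day, used in totals.items() if used > factor * baseline)


if __name__ == "__main__":
    logs = [
        ("2025-05-01", "gpt-4o", 600),
        ("2025-05-01", "gpt-3.5-turbo", 400),
        ("2025-05-02", "gpt-4o", 1200),
        ("2025-05-03", "gpt-4o", 5000),
    ]
    totals = daily_totals(logs)
    print(totals)
    print("Spike days:", find_spikes(totals))
```

The same grouping works per model or per endpoint if you key the totals on those fields, which helps when usage is spread across several internal tools.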

Future of OpenAI API Pricing

The pricing of OpenAI’s API is expected to evolve as usage patterns shift and new models continue to roll out. Just like we’ve seen the jump from GPT-3.5 to GPT-4, and now GPT-4o — each new release introduces different cost structures, use cases, and target audiences.

OpenAI will likely move toward a more granular, tier-based pricing model, where specific use cases — like image generation, embeddings, or memory-enabled agents — have separate pricing logic. We may also see expanded options for:

- Subscription-style pricing for businesses with steady monthly usage

- Credits for startups or low-resource use cases

- Heavier cost on premium features (like extended context windows or advanced memory)

Another trend to watch is pricing based on latency or priority access. Businesses that need faster replies (like real-time chat tools) may pay more for premium endpoints, while bulk processors (like batch content jobs) may opt for lower-cost, high-latency plans.

Also, the competition from Claude, Gemini, and open-source LLMs is pushing OpenAI to offer more flexibility. The GPT-4o release — priced lower and running faster — is a clear sign of that shift.

To avoid surprises in OpenAI API cost changes, stay connected to the official announcements. Here’s how:

- Check OpenAI’s pricing page regularly: They list updated token rates, changes in models, and new services there.

- Join the OpenAI newsletter: Updates, early feature rollouts, and usage tips often drop here first.

- Follow OpenAI on Twitter or Threads: New model launches or beta programs are usually teased there.

- Watch developer communities: Subreddits like r/OpenAI, Twitter/X spaces, and Discord servers are often where upcoming pricing changes are first spotted or debated.

Also, if you’re part of OpenAI’s partner or enterprise programs, you’ll typically receive early heads-up through your account rep. But even for individual developers, OpenAI’s documentation and blog give strong clues on what’s coming next.

The key takeaway? If your product or business depends on OpenAI’s tools, don’t just track costs — track the roadmap. Changes are coming faster than ever. And the earlier you adapt, the better control you’ll have over your OpenAI API cost in the long run.

Conclusion

If you’re planning to use OpenAI in 2025 — whether for content, chatbots, tools, or automation — understanding the OpenAI API cost is no longer optional. It’s the difference between building something sustainable and burning through a budget without realizing.

From prompt sizes to token usage, model choices to memory handling — small decisions make a big impact on cost. This blog gave you a complete picture of how OpenAI’s pricing works, what factors affect it, how it compares to competitors, and most importantly — what you can do to reduce expenses.

Here’s the quick recap:

- Choose your model wisely. GPT-4o is fast and affordable for most use cases.

- Use OpenAI’s token calculator to estimate and plan your usage.

- Monitor usage weekly to stay in control.

- Set alerts and limits before you start any new project.

- Test prompt length and context size — even a few hundred tokens extra per request can double costs.

OpenAI is moving fast. Prices, models, and features will keep changing. The best way to stay ahead is to keep testing, keep learning, and build lean from day one.

If you’re serious about scaling smart — bookmark the usage dashboard, stay active in dev communities, and treat “token cost” like a line item in your business plan. It’ll save you time, money, and frustration in the long run.

FAQs

1. Is there a free tier for the OpenAI API?

Not anymore. OpenAI previously gave new accounts complimentary credits to explore the API, but as covered in the free tier section above, it no longer offers free credits on sign-up. To start using the API, you need to add a minimum payment of $5 to your account.

2. How much does GPT-4 Turbo cost per token?

As of 2025, GPT-4 Turbo is priced at $0.01 per 1,000 input tokens and $0.03 per 1,000 output tokens. This makes it significantly cheaper than GPT-4.5, but more expensive than GPT-4o.

3. What’s the most cost-effective model for daily use?

GPT-3.5 Turbo is the most budget-friendly option. At just $0.0005 per 1,000 input tokens and $0.0015 for output, it’s ideal for basic chatbots, content drafts, or quick internal tools.

4. Can I estimate OpenAI API costs before launching?

Yes. Use the official OpenAI tokenizer to test your prompt length. Pair it with the pricing chart to estimate how many tokens your task will use — and what it will cost.

5. How do I avoid high OpenAI API bills?

Start by setting budget limits, tracking usage weekly, and optimizing prompts. Caching frequent responses and downgrading to cheaper models where accuracy isn’t critical also helps keep OpenAI API costs low.

Pankaj Arora (Founder & CEO)

Pankaj Arora is the Founder & CEO of iTechGen, a visionary leader with a deep passion for AI and technology. With extensive industry experience, he shares expert insights through his blogs, helping businesses harness the power of AI to drive innovation and success. Committed to delivering customer-first solutions, Pankaj emphasizes quality and real-world impact in all his endeavors. When not leading iTechGen, he explores emerging technologies and inspires others with his thought leadership. Follow his blogs for actionable strategies to accelerate your digital transformation and business growth.
